Run a single unit test with Mocha/Chai

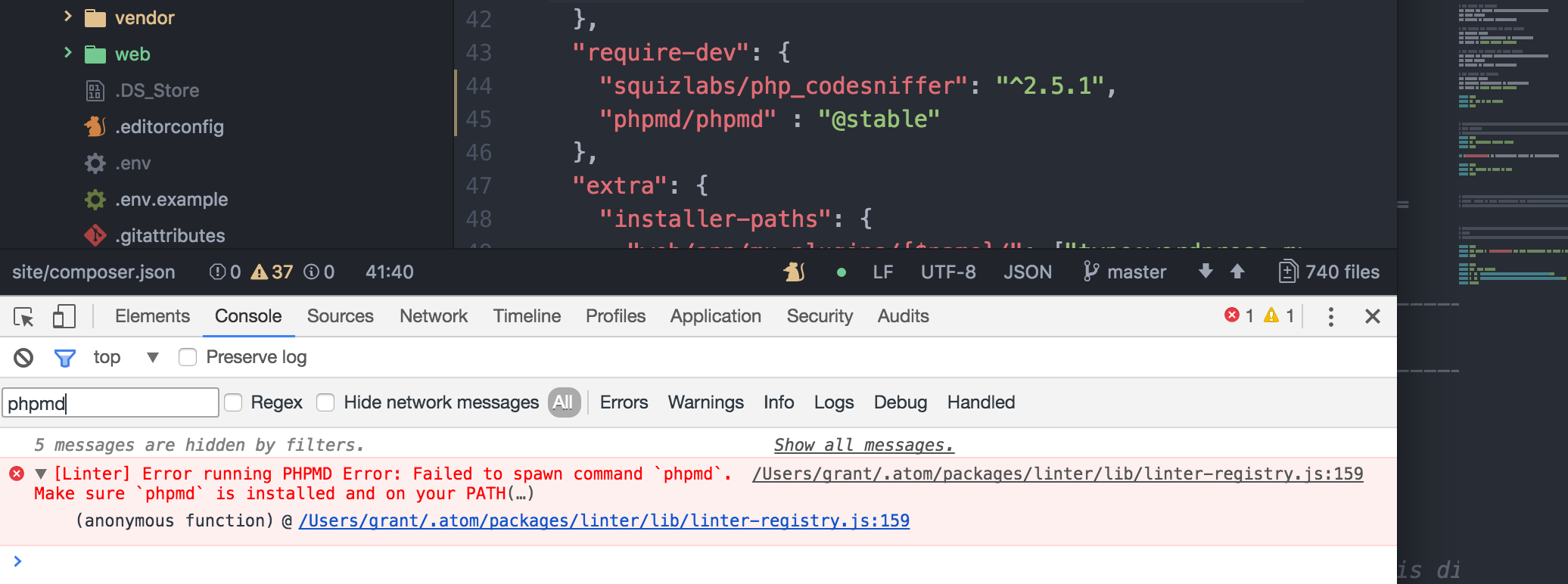

Sure, you can pass a file globbing pattern as a CLI argument to run a single JavaScript test file, or a group of them, but it’s not super convenient and often requires a trip to your README to remember how. Here’s a quicker way.