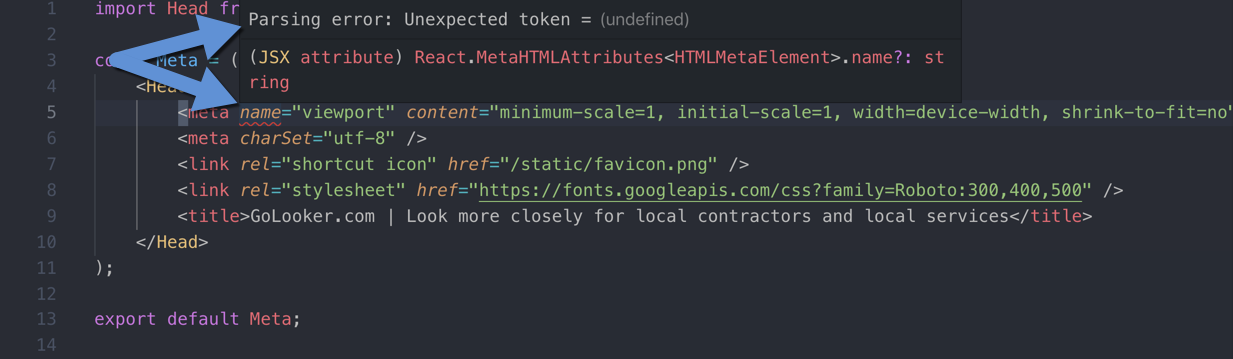

Errors & warnings while upgrading Terraform 0.12 to 0.14

While consolidating a handful of my sites from various hosting providers, I wanted to add those domains to my Cloudflare account to protect them from malicious traffic & improve their performance. At the time this post was written, 0.14 was the latest version of Terraform, but I was still on v0.12, which I had installed with a […]