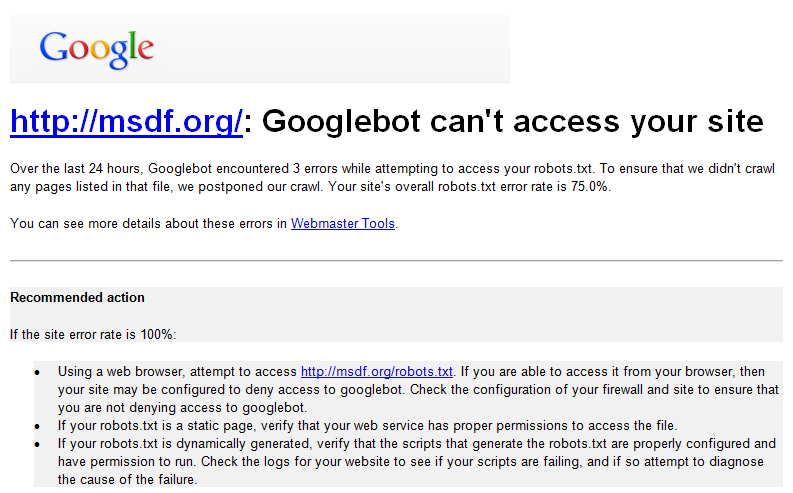

Yesterday, many Google Webmaster Tools users received unpleasant notifications that their websites were suddenly inaccessible to Google. After two hours of aggressive troubleshooting last night and another couple of hours this morning, I've concluded that this may be an issue on Google’s side. Search Engine Roundtable just posted an article confirming more reports of problems with Google accessing robots.txt.

In my own case, the naked msdf.org/robots.txt URL is accessible from every other browser, device, and third-party tool in my arsenal, yet Google has about an 80% error rate in accessing my robots.txt file. The www version is working perfectly, with no crawl errors or problems fetching as Googlebot, while the non-www version is having much less success. (Please note that you may also receive duplicate “Googlebot can’t access your site” errors for both the www and non-www versions.)
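If you want to repeat this kind of outside check yourself, here is a minimal sketch in Python that requests robots.txt on both the naked and www hostnames several times and reports how often the request fails. The function names (`robots_urls`, `fetch_status`, `error_rate`, `check_domain`) are my own for illustration, and the Googlebot-style User-Agent header is an assumption: it only imitates the header, so a clean result here cannot tell you what Google's own crawlers are seeing.

```python
import urllib.error
import urllib.request

# Assumption: a Googlebot-style User-Agent header. This imitates the header only;
# the request still comes from your machine, not from Google's crawlers.
USER_AGENT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def robots_urls(domain):
    """Return the robots.txt URLs for the naked and www hostnames."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [f"http://{bare}/robots.txt", f"http://www.{bare}/robots.txt"]


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if no response was received."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 404 or 503.
        return err.code
    except (urllib.error.URLError, OSError):
        # DNS failure, refused connection, timeout, and similar.
        return None


def error_rate(statuses):
    """Fraction of attempts that did not come back as HTTP 200."""
    if not statuses:
        return 0.0
    failures = sum(1 for status in statuses if status != 200)
    return failures / len(statuses)


def check_domain(domain, attempts=5):
    """Fetch each robots.txt URL several times and print a short summary."""
    for url in robots_urls(domain):
        statuses = [fetch_status(url) for _ in range(attempts)]
        print(f"{url}  statuses={statuses}  error rate={error_rate(statuses):.0%}")
```

Calling `check_domain("msdf.org")` would print one summary line per hostname. If both come back clean from your side while Webmaster Tools keeps reporting failures, that fits the pattern described here, though it is not proof on its own.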

The Fetch as Google tool within Webmaster Tools was helpful in understanding the problem, but ultimately the problem seems to be with Google, and your site’s index status is likely just fine. (Whew!) Still, use caution in writing off warnings from Google. You could very well be receiving these email warnings for good reason, especially if you received them before yesterday (on or before April 25, 2013).

Matt Cutts commented on the Google forum discussion earlier, acknowledging this could be an issue with Google, so I recommend you check out that forum thread. I’ll keep an eye on this until a resolution comes around, but if you’ve already tested your site in the Fetch as Google tool and all other bots are working normally, you may actually be able to do something you almost never (ever) want to do – ignore a Google Webmaster Tools alert.

UPDATE (2013-Apr-27)

Google’s John Mueller has indicated that this issue should now be resolved. https://productforums.google.com/d/msg/webmasters/mY75bBb3c3c/ARQqAWOf_6YJ